Intro

Welcome to my blog on how to fully automate the deployment of a Self-Hosted Integration Runtime using Terraform!

The title of this post is fairly self-explanatory, but I hope you find the contents useful and can apply some aspect of them to your own projects.

All code presented in this blog can be found on my GitHub: DataOpsDon/DataOps (github.com).

A self-hosted integration runtime (SHIR) in Azure is a component of Azure Data Factory that enables data integration across different systems and environments. It is software that you install and run on your own infrastructure, either on-premises or on a virtual machine (in this case, a virtual machine), and it provides secure, efficient communication between your local systems and Azure, so that data can be moved from on-premises systems into the cloud.

The code

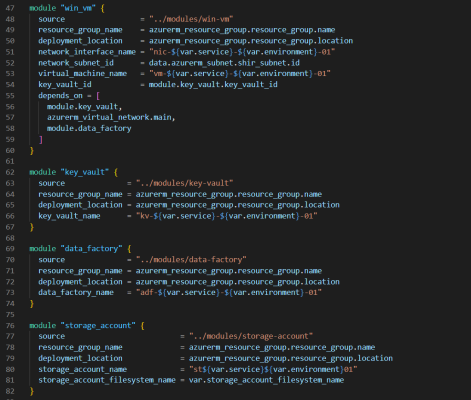

As part of this deployment the following components will be provisioned:

- Data Factory v2

- Key Vault

- Virtual Machine

- Storage Account

- Resource Group

- VNET

In this instance I have created a module for each component, plus a composite layer that calls these modules and specifies any additional configuration required to get the Self-Hosted Integration Runtime up and running. As mentioned previously, the code for these modules can be found on my GitHub page.
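The modules themselves live in the GitHub repository and are not reproduced here. As a rough stand-in, here is a minimal sketch of a few of the core resources written directly against the azurerm provider rather than through modules, assuming provider version 3.x. Every resource name and the region are hypothetical placeholders, and the VM, VNet and Key Vault are left out for brevity.

```hcl
terraform {
  required_providers {
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0"
    }
  }
}

provider "azurerm" {
  features {}
}

# Hypothetical names and region; adjust to your own conventions.
resource "azurerm_resource_group" "shir" {
  name     = "rg-shir-demo"
  location = "uksouth"
}

# Data Factory (v2) instance that will host the self-hosted IR.
resource "azurerm_data_factory" "shir" {
  name                = "adf-shir-demo"
  location            = azurerm_resource_group.shir.location
  resource_group_name = azurerm_resource_group.shir.name
}

# Storage account and private container that will hold the installation script.
resource "azurerm_storage_account" "shir" {
  name                     = "stshirdemo001" # must be globally unique: 3-24 lowercase letters and digits
  resource_group_name      = azurerm_resource_group.shir.name
  location                 = azurerm_resource_group.shir.location
  account_tier             = "Standard"
  account_replication_type = "LRS"
}

resource "azurerm_storage_container" "scripts" {
  name                  = "scripts"
  storage_account_name  = azurerm_storage_account.shir.name
  container_access_type = "private"
}
```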

For the purposes of this blog we are creating a new VNET resource; however, it is likely that you already have a Virtual Network to deploy your Virtual Machine into, so please adjust the Terraform code to account for this.
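If you are reusing an existing network, one option is to look up the subnet with a data source and point the VM's network interface at it instead of creating a VNet. This is a sketch under stated assumptions: the subnet, VNet and resource group names are hypothetical, and `azurerm_resource_group.shir` is assumed to be declared elsewhere in your configuration.

```hcl
# Look up a subnet in an existing virtual network instead of creating a new VNet.
# All three names below are hypothetical placeholders.
data "azurerm_subnet" "existing" {
  name                 = "snet-shir"
  virtual_network_name = "vnet-existing"
  resource_group_name  = "rg-network"
}

# The VM's network interface is then attached to the existing subnet.
resource "azurerm_network_interface" "shir_vm" {
  name                = "nic-shir-vm"
  location            = azurerm_resource_group.shir.location
  resource_group_name = azurerm_resource_group.shir.name

  ip_configuration {
    name                          = "internal"
    subnet_id                     = data.azurerm_subnet.existing.id
    private_ip_address_allocation = "Dynamic"
  }
}
```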

Please note that the virtual machine template is intended only as a baseline; any additional security requirements your organisation has must be applied on top of it.

Now that the modules for the resources have been configured and the parameters mapped appropriately, we need to start thinking about creating and installing the integration runtime. The installation process is as follows:

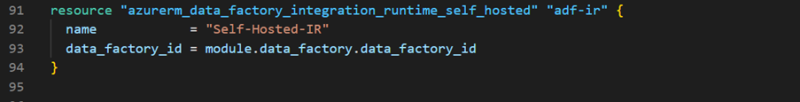

- Create the Self-Hosted IR on the Data Factory instance.

- Upload the installation script to the storage account.

- Create a Custom Script Extension on the virtual machine to run the installation script uploaded to the storage account.

Creating the SHIR on the Data Factory is very simple and requires little configuration (see below).

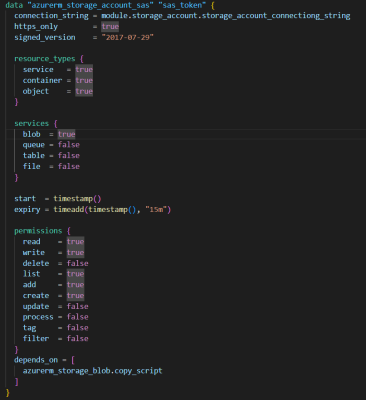
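For readers who cannot see the screenshot, a minimal sketch of the same idea is below, assuming azurerm 3.x (where the runtime is linked through `data_factory_id`) and a Data Factory declared elsewhere as `azurerm_data_factory.shir`. The runtime name and the output are hypothetical additions; the output simply shows the attribute that holds the key the VM will need later.

```hcl
# Self-hosted integration runtime registered against the Data Factory.
resource "azurerm_data_factory_integration_runtime_self_hosted" "shir" {
  name            = "shir-vm" # hypothetical name
  data_factory_id = azurerm_data_factory.shir.id
}

# The key the VM uses to register with this runtime; marked sensitive
# so Terraform does not print it in plan/apply output.
output "shir_auth_key" {
  value     = azurerm_data_factory_integration_runtime_self_hosted.shir.primary_authorization_key
  sensitive = true
}
```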

With the IR configuration in place, the next step is to generate a temporary SAS token that allows the Azure virtual machine to connect to the Storage Account and download the installation script (this script can be obtained from the Microsoft Quickstarts page). For this operation I have used some dynamic content so that the SAS token is only valid for 15 minutes.

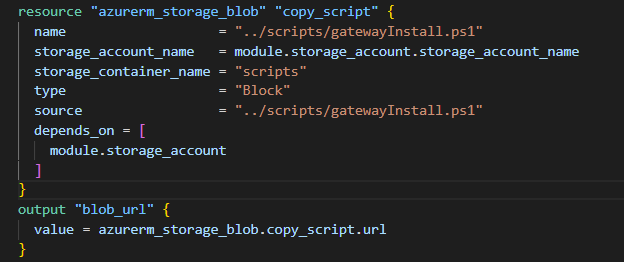
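One way to express a 15-minute window is with Terraform's `timestamp()` and `timeadd()` functions feeding the `azurerm_storage_account_sas` data source. This is a sketch rather than the exact configuration in the screenshot: it assumes azurerm 3.x (where the `permissions` block also requires `tag` and `filter`) and a storage account declared elsewhere as `azurerm_storage_account.shir`. It grants read-only access to blob objects, which is all the VM should need to download the script.

```hcl
locals {
  # timestamp() is evaluated at apply time, so the window starts "now"
  # and closes 15 minutes later on every run.
  sas_start  = timestamp()
  sas_expiry = timeadd(local.sas_start, "15m")
}

data "azurerm_storage_account_sas" "script" {
  connection_string = azurerm_storage_account.shir.primary_connection_string
  https_only        = true
  start             = local.sas_start
  expiry            = local.sas_expiry

  resource_types {
    service   = false
    container = false
    object    = true # the script is a single blob object
  }

  services {
    blob  = true
    queue = false
    table = false
    file  = false
  }

  # Read-only: the VM only downloads the script.
  permissions {
    read    = true
    write   = false
    delete  = false
    list    = false
    add     = false
    create  = false
    update  = false
    process = false
    tag     = false
    filter  = false
  }
}
```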

Now we can upload the script to the storage account using the azurerm_storage_blob resource defined in the following code block. Make sure that the blob URL is output as defined below.

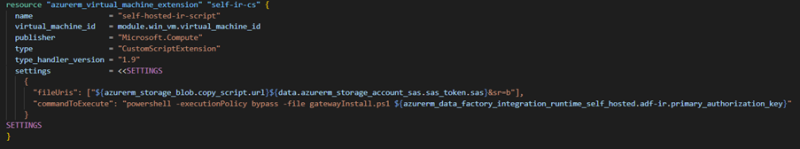
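A minimal sketch of the upload and the URL output follows. The blob name `gatewayInstall.ps1` and the local path are assumptions (use whatever name your copy of the Quickstarts script has), and the storage account and container are assumed to be declared elsewhere as `azurerm_storage_account.shir` and `azurerm_storage_container.scripts`.

```hcl
# Upload the installation script as a block blob.
# Blob name and local source path are hypothetical.
resource "azurerm_storage_blob" "install_script" {
  name                   = "gatewayInstall.ps1"
  storage_account_name   = azurerm_storage_account.shir.name
  storage_container_name = azurerm_storage_container.scripts.name
  type                   = "Block"
  source                 = "${path.module}/scripts/gatewayInstall.ps1"
}

# Expose the blob URL so it can be combined with the SAS token later.
output "install_script_url" {
  value = azurerm_storage_blob.install_script.url
}
```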

We are now at the stage where we can configure the Custom Script Extension on the virtual machine to install the runtime software. The code block below defines how this can be configured.

This configuration defines the properties of the Custom Script Extension; note that the settings block contains dynamic references to outputs of the configurations defined previously.

First, fileUris must reference the uploaded script's blob URL combined with the SAS token generated earlier, so that the virtual machine can access the storage account (it is important to add &sr=b at the end of the token).

Second, commandToExecute must reference the PowerShell script uploaded to the storage account and pass in the one required argument: the authentication key of the integration runtime.
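A sketch of how these pieces could fit together in an `azurerm_virtual_machine_extension` is below. It assumes a Windows VM declared elsewhere as `azurerm_windows_virtual_machine.shir` (hypothetical), the resource names used for the runtime, blob and SAS in the earlier steps, and an install script that takes the key as its first positional argument. One deliberate deviation from the settings-block approach described above: `commandToExecute` is placed in `protected_settings`, which the extension encrypts, because the command line carries the IR key.

```hcl
resource "azurerm_virtual_machine_extension" "install_shir" {
  name                 = "install-shir"
  virtual_machine_id   = azurerm_windows_virtual_machine.shir.id # hypothetical VM
  publisher            = "Microsoft.Compute"
  type                 = "CustomScriptExtension"
  type_handler_version = "1.10"

  # Public settings: where to download the script from.
  # The SAS string is expected to start with "?", so it is appended straight
  # to the blob URL, followed by "&sr=b" as the text recommends.
  settings = jsonencode({
    fileUris = [
      "${azurerm_storage_blob.install_script.url}${data.azurerm_storage_account_sas.script.sas}&sr=b"
    ]
  })

  # Protected settings are encrypted, which suits a command that carries the IR key.
  # Script name and positional-argument usage are assumptions.
  protected_settings = jsonencode({
    commandToExecute = "powershell -ExecutionPolicy Unrestricted -File gatewayInstall.ps1 ${azurerm_data_factory_integration_runtime_self_hosted.shir.primary_authorization_key}"
  })
}
```

Because the SAS token is regenerated on every run, the extension's settings will differ each time, so expect Terraform to plan an update to the extension on each apply.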

Once all this has been configured, the deployment can be started with the usual Terraform workflow:

- terraform init

- terraform plan

- terraform apply

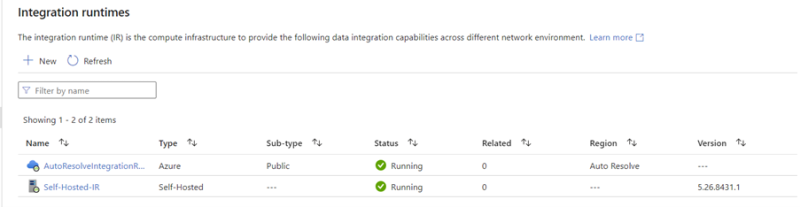

When the deployment has completed successfully, log in to your Data Factory workspace to see the connected SHIR.

I do hope you have found this blog helpful. If you have any questions or queries, please reach out to me on Twitter: @DataOpsDon.